#include <CalibrationClasses.h>

Public Member Functions

| DetectorCalib () | |

| virtual double | applyCalib (double a_value) const =0 |

| virtual DetectorCalib * | clone ()=0 |

| virtual double | invertCalib (double a_value) const |

| virtual std::ostream & | print (std::ostream &a_stream=std::cout) const |

| prints nothing by default | |

| CalibType | getCalibType () |

| void | setTime (uint32_t a_t) |

| sets the experiment time used during processing, expressed as time since the epoch | |

| void | setTDiv (int a_timeDiv) |

| sets the divisor for which to consider the given time | |

| virtual | ~DetectorCalib () |

Protected Attributes

| CalibType | m_type |

| uint32_t | m_t |

| int | m_tDiv |

Constructor & Destructor Documentation

◆ DetectorCalib()

| DetectorCalib::DetectorCalib | ( | ) |

◆ ~DetectorCalib()

| virtual DetectorCalib::~DetectorCalib | ( | ) | virtual |

Member Function Documentation

◆ applyCalib()

| virtual double DetectorCalib::applyCalib | ( | double | a_value | ) | const | pure virtual |

pure virtual function that returns the calibrated value; every inheriting class must implement it

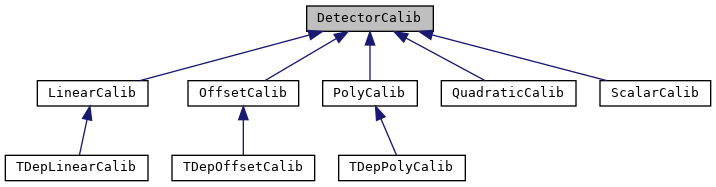

Implemented in TDepPolyCalib, TDepOffsetCalib, TDepLinearCalib, PolyCalib, QuadraticCalib, LinearCalib, OffsetCalib, and ScalarCalib.
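As a rough illustration of what implementing `applyCalib()` involves, the sketch below uses a simplified stand-in for the base class rather than the real header. `DetectorCalibSketch`, `LinearSketch`, `calibrate`, and the slope/offset parameters are hypothetical names chosen for this example; the actual `LinearCalib` may store and apply its parameters differently.

```cpp
// Simplified stand-in for DetectorCalib (assumption: the real class in
// CalibrationClasses.h has more members, which are omitted here).
class DetectorCalibSketch {
public:
    virtual ~DetectorCalibSketch() = default;
    // Pure virtual: every derived class must provide the mapping.
    virtual double applyCalib(double a_value) const = 0;
};

// Hypothetical linear calibration: calibrated = slope * raw + offset.
class LinearSketch : public DetectorCalibSketch {
public:
    LinearSketch(double a_slope, double a_offset)
        : m_slope(a_slope), m_offset(a_offset) {}

    double applyCalib(double a_value) const override {
        return m_slope * a_value + m_offset;
    }

private:
    double m_slope;
    double m_offset;
};

// Callers only need the base-class interface; the derived
// implementation is selected at run time.
double calibrate(const DetectorCalibSketch& a_calib, double a_raw) {
    return a_calib.applyCalib(a_raw);
}
```

Because the function is pure virtual, a derived class that omits `applyCalib()` stays abstract and cannot be instantiated.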

◆ clone()

| virtual DetectorCalib * DetectorCalib::clone | ( | ) | pure virtual |

required to make deep copies of derived classes; every inheriting class must implement it

Implemented in TDepPolyCalib, TDepOffsetCalib, TDepLinearCalib, PolyCalib, QuadraticCalib, LinearCalib, OffsetCalib, and ScalarCalib.
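The deep-copy role of `clone()` can be sketched as follows. This is a minimal example under stated assumptions: `CalibBase`, `OffsetSketch`, and `deepCopy` are hypothetical stand-ins, and the caller is assumed to take ownership of the pointer that `clone()` returns, which the documentation above does not state.

```cpp
#include <memory>
#include <vector>

// Simplified stand-in for DetectorCalib (hypothetical, for illustration).
class CalibBase {
public:
    virtual ~CalibBase() = default;
    virtual double applyCalib(double a_value) const = 0;
    // Non-const, matching the documented clone() signature.
    virtual CalibBase* clone() = 0;
};

// Hypothetical derived class: calibrated = raw + offset.
class OffsetSketch : public CalibBase {
public:
    explicit OffsetSketch(double a_offset) : m_offset(a_offset) {}

    double applyCalib(double a_value) const override {
        return a_value + m_offset;
    }

    // Covariant return type: copies the full derived object.
    OffsetSketch* clone() override { return new OffsetSketch(*this); }

private:
    double m_offset;
};

// Deep-copies calibrations held through base-class pointers.
// Assumption: the caller owns whatever clone() returns.
std::vector<std::unique_ptr<CalibBase>> deepCopy(
    const std::vector<std::unique_ptr<CalibBase>>& a_src) {
    std::vector<std::unique_ptr<CalibBase>> copy;
    copy.reserve(a_src.size());
    for (const auto& calib : a_src) {
        copy.emplace_back(calib->clone());
    }
    return copy;
}
```

Copying through `clone()` avoids slicing: the copy keeps the derived type even though the container only knows the base class.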

◆ getCalibType()

| CalibType DetectorCalib::getCalibType | ( | ) |

returns the type of the concrete implementation, so that the derived class can be identified at run time

◆ invertCalib()

| virtual double DetectorCalib::invertCalib | ( | double | a_value | ) | const | virtual |

provides the inverse mapping of a given calibration, for example when applying cuts. Implementation is not enforced: the base class implementation returns -10000.

Reimplemented in LinearCalib.

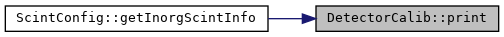
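Since the base class returns -10000 when no inverse is provided, calling code may want to check for that value before using the result. The sketch below shows one way to do so; `CalibBase`, `LinearSketch`, `SquareSketch`, `kNoInverse`, and `hasInverse` are hypothetical names, and the linear inverse assumes a nonzero slope.

```cpp
// Sentinel documented for the base-class invertCalib().
const double kNoInverse = -10000.0;

// Simplified stand-in for DetectorCalib (hypothetical, for illustration).
class CalibBase {
public:
    virtual ~CalibBase() = default;
    virtual double applyCalib(double a_value) const = 0;
    // Default: no inverse available, so return the sentinel.
    virtual double invertCalib(double /*a_value*/) const { return kNoInverse; }
};

// Hypothetical linear calibration that supplies an inverse.
class LinearSketch : public CalibBase {
public:
    LinearSketch(double a_slope, double a_offset)
        : m_slope(a_slope), m_offset(a_offset) {}

    double applyCalib(double a_value) const override {
        return m_slope * a_value + m_offset;
    }

    // Assumes m_slope != 0.
    double invertCalib(double a_value) const override {
        return (a_value - m_offset) / m_slope;
    }

private:
    double m_slope;
    double m_offset;
};

// Hypothetical calibration that does not override invertCalib().
class SquareSketch : public CalibBase {
public:
    double applyCalib(double a_value) const override {
        return a_value * a_value;
    }
};

// True if the result differs from the sentinel.
bool hasInverse(const CalibBase& a_calib, double a_value) {
    return a_calib.invertCalib(a_value) != kNoInverse;
}
```

A sentinel check like this cannot distinguish a missing inverse from a genuine inverse that happens to equal -10000, so it is only reliable when that value lies outside the expected raw range.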

◆ print()

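| virtual std::ostream & DetectorCalib::print | ( | std::ostream & | a_stream = std::cout | ) | const | virtual |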

prints nothing by default

Reimplemented in TDepPolyCalib, TDepOffsetCalib, TDepLinearCalib, PolyCalib, QuadraticCalib, LinearCalib, and OffsetCalib.

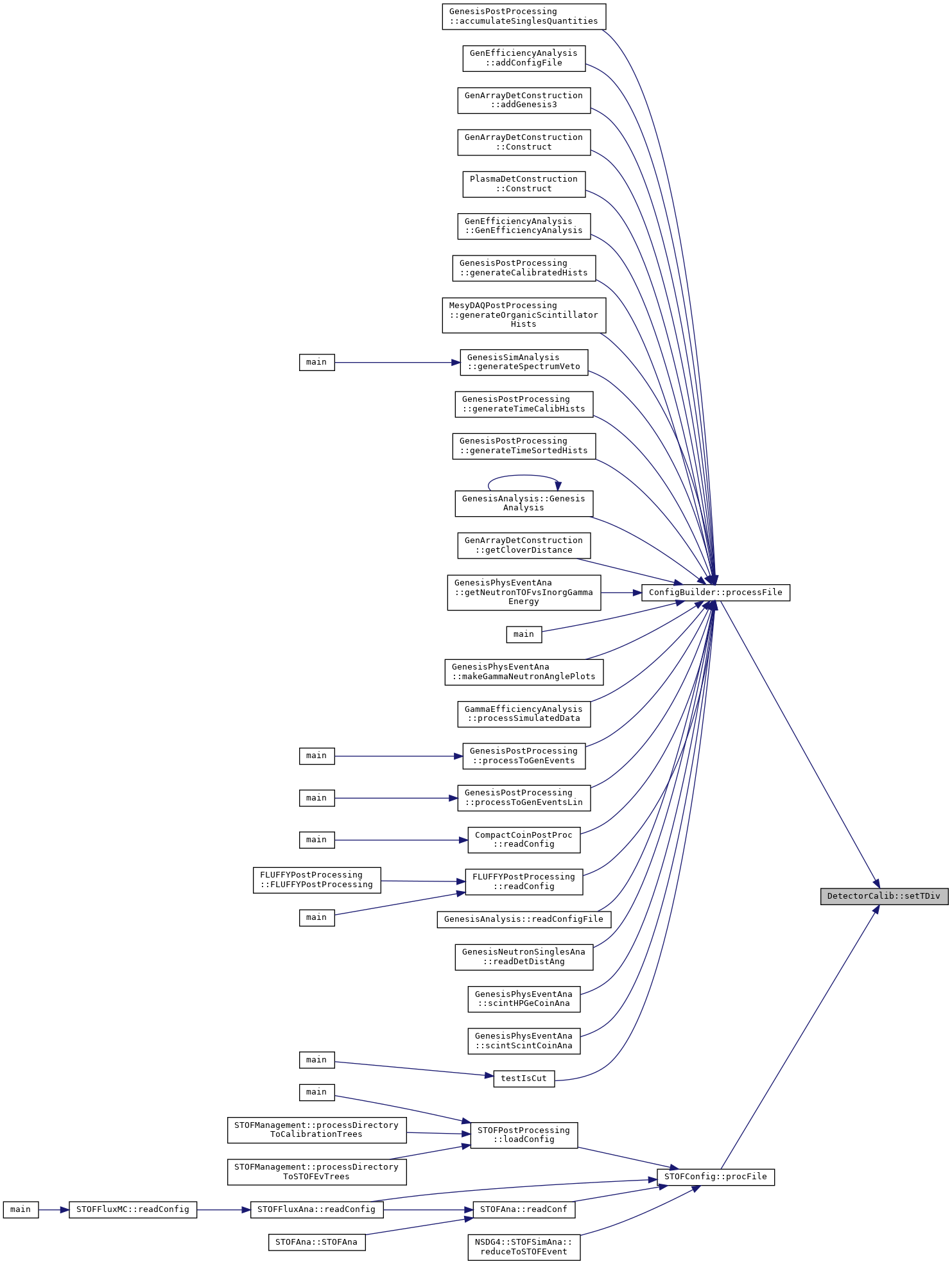

◆ setTDiv()

| void DetectorCalib::setTDiv | ( | int | a_timeDiv | ) |

sets the divisor for which to consider the given time

◆ setTime()

| void DetectorCalib::setTime | ( | uint32_t | a_t | ) |

sets the experiment time used during processing, expressed as time since the epoch

Member Data Documentation

◆ m_t

| uint32_t DetectorCalib::m_t | protected |

◆ m_tDiv

| int DetectorCalib::m_tDiv | protected |

◆ m_type

| CalibType DetectorCalib::m_type | protected |

The documentation for this class was generated from the following files:

- BasicSupport/include/CalibrationClasses.h

- BasicSupport/src/CalibrationClasses.cpp