Leading accelerator facilities perform regional isotope production using high-intensity, high-energy (p,x) reactions (\(E_p \geq 100\) MeV) on stable targets, but the scarcity of proton-induced reaction data creates significant, unavoidable uncertainties in target design, optimal beam parameters, and knowledge of contaminant co-production. Theory cannot fill this gap, since accurate modeling is notoriously difficult at these energies; only 8 datasets currently guide modeling for \(E_p \geq 100\) MeV, and theoretical predictions have differed from subsequent measurements by an amount on the order of 50–100%.

The TREND results are therefore not only useful for alleviating the scarcity of high-energy proton-induced data; they also provide a valuable tool for studying nuclear reaction modeling codes and assessing their predictive capabilities for proton reactions up to 200 MeV.

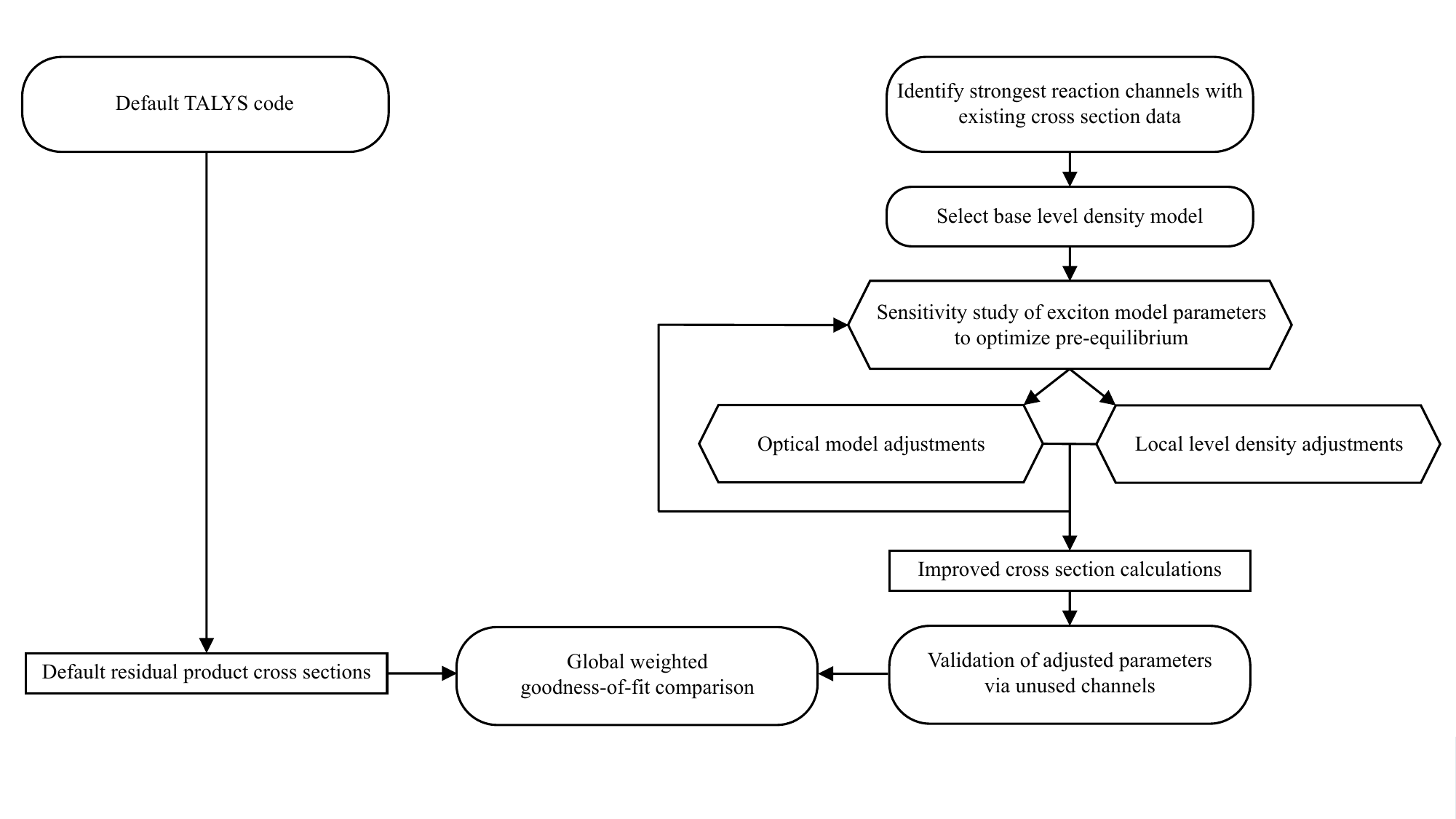

Our program has in turn developed a new data analysis methodology for high-energy (p,x) reactions. We have set forth a systematic, scientifically justifiable procedure to determine the set of reaction model input parameters that best reproduces the most prominent reaction channels observed in an irradiation. This is our attempt to develop an early evaluation approach for proton-induced reactions, including a validation technique based on cumulative production results, that could inform the future development of a charged-particle evaluated database. Additionally, our methodology aims to improve on an accepted approach in the cross section measurement literature, in which too few observables are used to guide modeling parameter adjustments, potentially subjecting the modeling to compensating errors. This work is a unique merger of experimental work and evaluation techniques, providing insight into pre-equilibrium reaction dynamics and a host of nuclear data properties relevant to the accurate modeling of high-energy proton-induced reactions.

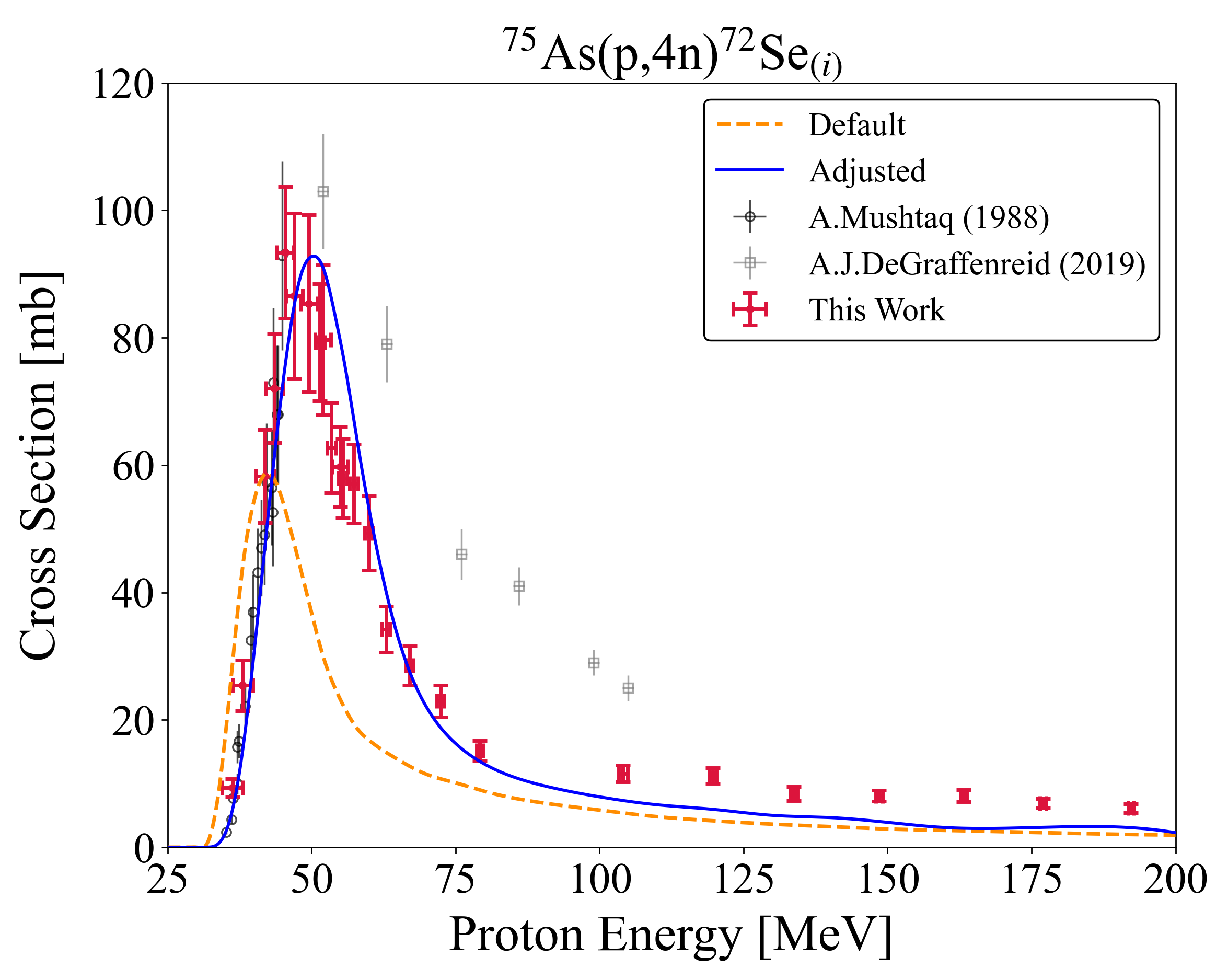

We use the TALYS nuclear reaction code to carry out the data analysis. TALYS is widely used in the nuclear community, is an accessible code of choice for reaction cross section predictions, and offers an abundance of adjustable keywords with which to investigate and interpret residual product excitation function data. The procedure has to date been developed from, and applied to, the extracted TREND niobium and arsenic cross sections, improving the global \(\chi^2\) of the model predictions by a factor of 2–4.
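One way such a global \(\chi^2\) comparison could be computed is sketched below. This is a minimal illustration, not the procedure used in this work: it assumes the global figure of merit is an equal-weight average of per-channel reduced \(\chi^2\) values, and the channel names, cross sections, and uncertainties are made-up placeholders rather than TREND data or TALYS output.

```python
import numpy as np


def reduced_chi2(measured, uncertainty, predicted):
    """Reduced chi-squared of one reaction channel (sum of squared pulls / N points)."""
    measured = np.asarray(measured, dtype=float)
    uncertainty = np.asarray(uncertainty, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    pulls = (measured - predicted) / uncertainty
    return float(np.sum(pulls**2) / measured.size)


def global_chi2(channels, predictions):
    """Equal-weight mean of the per-channel reduced chi-squared values.

    Equal weighting is an assumption of this sketch; a real analysis might
    weight channels by cumulative cross section or by number of data points.
    """
    per_channel = [
        reduced_chi2(data["sigma"], data["unc"], predictions[name])
        for name, data in channels.items()
    ]
    return float(np.mean(per_channel))


# Placeholder excitation-function points (mb) for two hypothetical channels.
channels = {
    "channel_A": {"sigma": [10.0, 20.0, 30.0], "unc": [1.0, 2.0, 3.0]},
    "channel_B": {"sigma": [4.0, 8.0], "unc": [0.5, 1.0]},
}

# Placeholder model predictions at the same energies: default vs. adjusted inputs.
default_pred = {"channel_A": [12.0, 24.0, 36.0], "channel_B": [5.0, 10.0]}
adjusted_pred = {"channel_A": [11.0, 22.0, 33.0], "channel_B": [4.5, 9.0]}

chi2_default = global_chi2(channels, default_pred)
chi2_adjusted = global_chi2(channels, adjusted_pred)
improvement = chi2_default / chi2_adjusted

print(f"default: {chi2_default:.2f}, adjusted: {chi2_adjusted:.2f}, "
      f"improvement factor: {improvement:.2f}")
```

Averaging per-channel values, rather than pooling all points into one sum, keeps a channel with many measured energies from dominating the metric. That design choice is itself an assumption here.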

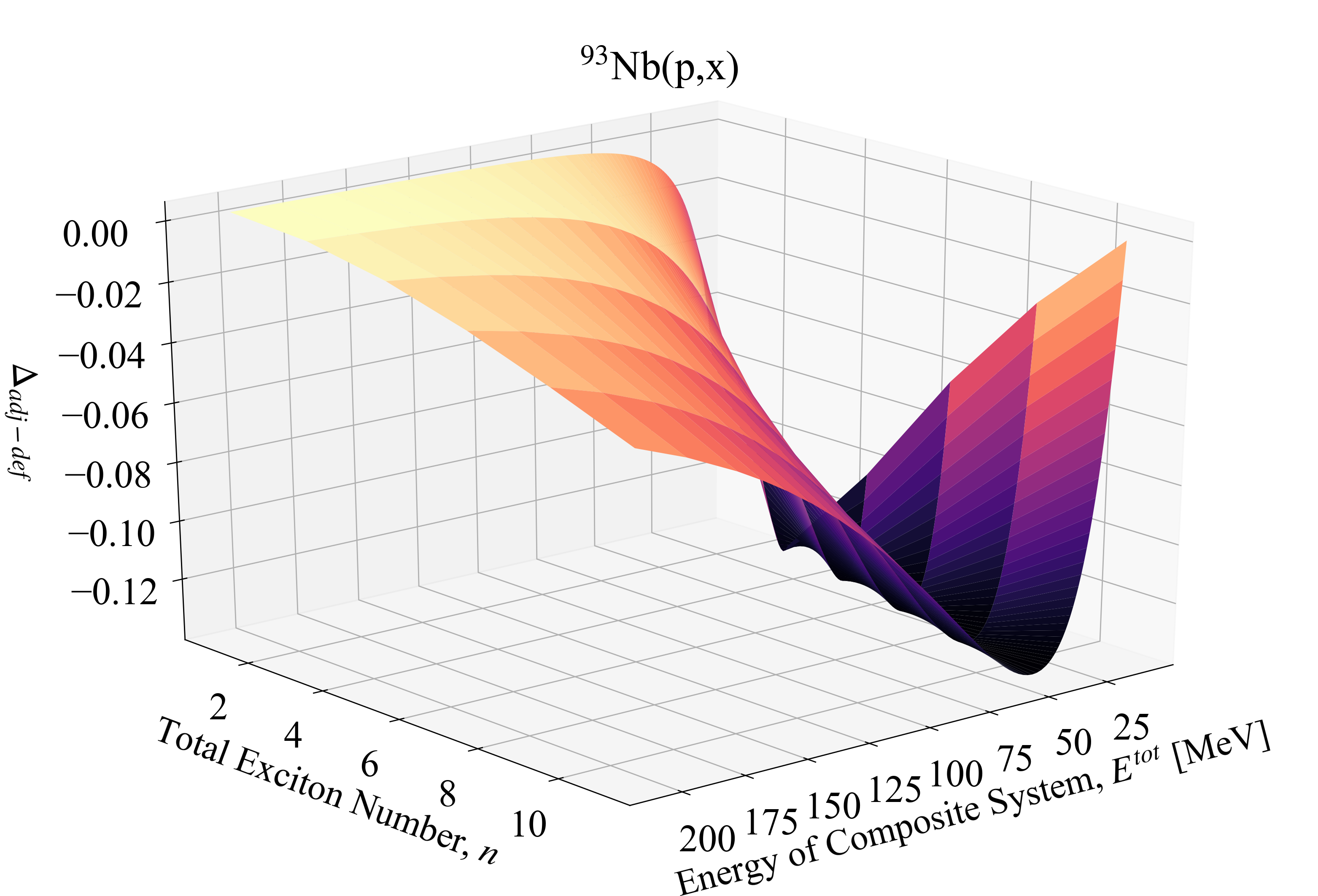

We used the methodology to conduct the first systematic study of the pre-equilibrium two-component exciton model intranuclear scattering rates. Findings of a consistent modification of exciton strength in the transition between the Hauser-Feshbach and exciton models suggest that global model adjustments may be required to act as a surrogate for better damping into the compound nucleus regime.

Our modeling framework is still in its nascent phase, founded on manual sensitivity studies. We look forward to continuing research into charged-particle modeling and the associated impacts of pre-equilibrium calculations. Some of that future work may include:

- A continuing investigation of the departure from equal exciton matrix elements for neutron-induced and proton-induced reactions by systematically studying one reaction channel across multiple targets.

- Expanding the procedure to include prompt-\(\gamma\) data, emission spectra, and double-differential data. Increased dataset diversity will help clarify effects between level density, optical model, and pre-equilibrium parameterizations.

- Improving the treatment of the coupled-channels optical model in order to extend the analysis to deformed target nuclei.

- Incorporating automation into the parameter sensitivity search, such as search techniques within a Bayesian framework, building on the exciton adjustment knowledge already acquired. This would help to determine a global minimum for parameter optimization more accurately and to express the resolving power of different parameters and channels more quantitatively.
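
The last item above, expressing a parameter's resolving power quantitatively, can be illustrated with a deliberately simple sketch. It assumes a single hypothetical scaling parameter, a flat prior, and a likelihood proportional to \(\exp(-\chi^2/2)\). The function `toy_chi2` is an invented quadratic stand-in for what would in practice be a global \(\chi^2\) obtained from a reaction-code run, and none of the numbers come from this work.

```python
import numpy as np


def toy_chi2(scale):
    """Hypothetical chi-squared surface with a minimum at scale = 1.3.

    Stand-in only: a real study would evaluate the reaction code at each
    parameter value and compare against measured cross sections.
    """
    return ((scale - 1.3) / 0.2) ** 2


def grid_posterior(chi2_fn, grid):
    """Posterior mean and standard deviation on a uniform grid (flat prior)."""
    chi2 = chi2_fn(grid)
    # Subtract the minimum before exponentiating for numerical stability.
    weights = np.exp(-0.5 * (chi2 - chi2.min()))
    weights /= weights.sum()
    mean = float(np.sum(weights * grid))
    std = float(np.sqrt(np.sum(weights * (grid - mean) ** 2)))
    return mean, std


grid = np.linspace(0.3, 2.3, 2001)  # uniform grid over the hypothetical parameter
post_mean, post_std = grid_posterior(toy_chi2, grid)
print(f"posterior mean = {post_mean:.3f}, posterior width = {post_std:.3f}")
```

In this picture, a narrow posterior width indicates that the fitted channels constrain the parameter strongly, while a broad one indicates little resolving power. An exhaustive grid is only practical for one or two parameters; a higher-dimensional search would need sampling or surrogate-based methods.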

This is an important first step in an area where no formalism or data previously existed. Continuing to develop this type of approach brings data work and evaluation into closer alignment, which is a necessary path forward.